Random thoughts

Friday, May 24, 2019

Happy birthday, www.ibm.com!

Happy birthday, www.ibm.com, and welcome to the Quarter Century Club!

Related links:

- IBM

- IBM homepage history in screenshots by IBM's first corporate webmaster, Ed Costello

Labels: ibm, technology, webdevelopment

Thursday, March 28, 2019

Switching password managers: PowerShell to the rescue

Photo credit: Image by Jan Alexander from Pixabay

The integration with Firefox deteriorated when legacy extensions were dropped from the browser, and the new RoboForm extension never quite reached the same ease of use and consistency in the user interface. The time had come to look into alternatives, and the choice was a combination of KeePass, the popular open source product, and 1Password, an enterprise-ready solution that supports shared vaults for families and teams.

The migration seemed easy: RoboForm does have CSV export capabilities, although somewhat hidden in the latest version, and 1Password claims the ability to import RoboForm CSV files. After a few attempts, however, the results were mixed, to say the least. Some userids ended up in the password fields, and multiline notes were interpreted as tags. Clearly something wasn't right. 1Password support explained that the format seems to have changed recently, with RoboForm now exporting cards with the fields ordered as

whereas 1Password expects

That's where my new affection for PowerShell comes into play. This would have been entirely doable in REXX, Perl, Python or any other language I have used for reformatting data, but parsing and generating CSVs can be tricky to implement or require additional modules. Not so in PowerShell, where the conversion from an arbitrarily ordered CSV with headers is a simple one-liner:

And voilà, all data automagically ends up in the right fields.
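For comparison, the same reordering can be sketched in Python. This is not the PowerShell one-liner mentioned above. The column names (`Name`, `Url`, `Login`, `Pwd`, `Note`) and the target order are placeholders, because the actual RoboForm and 1Password field lists are not reproduced here. The sketch reads every row by header name with the standard `csv` module, so the column order of the input no longer matters, and quoted multiline notes stay in one field.

```python
import csv
import io


def reorder_csv(text, target_fields):
    """Rewrite CSV text so that its columns follow target_fields."""
    reader = csv.DictReader(io.StringIO(text))
    out = io.StringIO()
    # extrasaction="ignore" drops input columns the target does not list.
    writer = csv.DictWriter(out, fieldnames=target_fields,
                            extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Values are looked up by header name, not by position.
        writer.writerow({name: row.get(name, "") for name in target_fields})
    return out.getvalue()


# Hypothetical export row with a quoted multiline note.
SAMPLE = ('Name,Url,Login,Pwd,Note\n'
          'Example,https://example.com,alice,s3cret,"line one\nline two"\n')
TARGET = ["Name", "Login", "Pwd", "Url", "Note"]  # assumed target order

if __name__ == "__main__":
    print(reorder_csv(SAMPLE, TARGET))
```

Missing columns are written as empty strings, which makes a mismatch between the assumed and the real header names easy to spot in the output.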

Labels: powershell, security, technology

Thursday, May 17, 2018

WeAreDevelopers 2018 conference notes – Day 2

Labels: austria, technology, wearedevs, webdevelopment

Wednesday, May 16, 2018

WeAreDevelopers 2018 conference notes – Day 1

Registration was surprisingly fast and painless. A Graham roll and an energy drink as developer breakfast was maybe slightly too clichéd (or I am getting old), but fortunately there was plenty of coffee available all day, including decent cappuccino at one of the sponsor booths.

Asked at the conference opening what topics people would be most interested in hearing about, Blockchain came out first, followed by machine learning and, still, devops.

Steve Wozniak rocked the Austria Center with an inspiring “fireside chat”. Talking with the brilliant Monty Munford, The Woz answered questions submitted by the audience and shared his views on anything from the early days of computing and why being a developer was great then (“Developers can do things that other people can’t.”) to self-driving electric cars (overselling and underdelivering) and the Blockchain (too early, similar to the dot com bubble), interspersed with personal anecdotes and, as a running gag, promoting the Apple iCloud.

As a long-time mainframe guy, I liked his claimed programming language skills too: FORTRAN, COBOL, PL/I, and IBM System/360 assembler, although he did mention playing more with the Raspberry Pi these days.

Mobile payments were a good example of the design principles that made Apple famous and successful. Steve mentioned how painful early mobile payment solutions were, requiring multiple manual steps to initiate and eventually sign off a transaction, compared to Apple Pay where you don’t even need to unlock your device. (I haven’t tried either one, and they don’t seem to be too popular yet.)

The most valuable advice though was to do what you are good at and what you like (“money is secondary”), to keep things simple, and live your life instead of showing it off, which is why he left Facebook, feeling that he didn’t get enough back in return. For an absolutely brilliant graphical summary of the session, see Katja Budnikov’s real-time sketch note.

Johannes Pichler of karriere.at followed an ambitious plan to explain OAuth 2.0 from the protocol to a sample PHP implementation in just 45 minutes. I may need to take another look at the presentation deck later to work through the gory details.

A quick deployment option is to use one of the popular shared services such as oauth.io or auth0.com, but it comes at the price of completely outsourcing authentication and authorization and having to transfer user data to the cloud. For the development of an OAuth server, several frameworks are available including node-oauth2-server for Node.js, Spring Security OAuth2 for Java, and the Slim framework for PHP.

In the afternoon, Jan Mendling of the WU Executive Academy looked at how disruptive technologies like Blockchain, Robotic Process Automation, and Process Mining shape business processes of the future. One interesting observation is about product innovation versus process innovation: most disruptive companies like Uber or Foodora still offer the same products, like getting you from A to B, serving food, etc. but with different processes.

Tasks can be further classified as routine versus non-routine, and cognitive versus manual. Traditionally, computerization has focused on routine, repetitive cognitive tasks only. Increasingly we are seeing computers also take on non-routine cognitive tasks (for example, Watson interpreting medical images), and routine manual, physical tasks (for example, Amazon warehouse automation).

Creating Enterprise Web Applications with Node.js was so popular that security did not let more people in, and there was no overflow area available either, so I missed this one and will have to go with the presentation only.

Equally crowded was Jeremiah Lee’s session JSON API: Your smart default. Talking about his experience at Fitbit with huge data volumes and evolving data needs, he made the case why jsonapi.org should be the default style for most applications, making use of HTTP caching features and enabling “right-sized” APIs.

Hitting on GraphQL, Jeremiah made the point that developer experience is not more important than end user performance. That said, small resources and lots of HTTP requests should be okay now. The debate between response size and number of requests is partially resolved by improvements in network communication, namely HTTP/2 header compression and multiplexing, reduced latency with TLS 1.3 and faster and more resilient LTE mobile networks, and by mechanisms to selectively include data on demand using the include and fields query parameters.
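To illustrate the on-demand mechanisms, here is a small Python sketch that builds a JSON:API request URL. The `include` and `fields[TYPE]` query parameters come from the JSON:API specification. The host name and the resource types (`articles`, `people`) are made up for the example and are not taken from the talk.

```python
from urllib.parse import urlencode


def jsonapi_url(base, include=(), fields=None):
    """Build a request URL with JSON:API include and sparse fieldsets."""
    params = []
    if include:
        # Related resources to embed in the same response.
        params.append(("include", ",".join(include)))
    for resource_type, names in (fields or {}).items():
        # Restrict each resource type to the attributes the client needs.
        params.append((f"fields[{resource_type}]", ",".join(names)))
    # Keep brackets and commas readable instead of percent-encoding them.
    query = urlencode(params, safe="[],")
    return f"{base}?{query}" if query else base


if __name__ == "__main__":
    print(jsonapi_url(
        "https://api.example.com/articles",  # hypothetical endpoint
        include=["author"],
        fields={"articles": ["title", "body"], "people": ["name"]},
    ))
```

A client that only needs titles and author names would then receive a "right-sized" response instead of the full resources.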

Data model normalization and keeping the data model between the clients and the server consistent was another important point, and the basis for efficient synchronization and caching. There is even a JSON Patch format for selectively changing JSON documents.
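As a rough idea of what such a patch looks like, the following Python sketch applies a small subset of JSON Patch (RFC 6902): only the `add`, `remove` and `replace` operations, and only for paths below the document root. The sample document is invented for illustration. A production system would more likely rely on a complete, tested implementation.

```python
import copy


def _resolve(doc, path):
    """Return (parent container, last token) for a JSON Pointer path."""
    tokens = [t.replace("~1", "/").replace("~0", "~")
              for t in path.split("/")[1:]]
    parent = doc
    for token in tokens[:-1]:
        parent = parent[int(token)] if isinstance(parent, list) else parent[token]
    return parent, tokens[-1]


def apply_patch(doc, patch):
    """Apply add, remove and replace operations to a copy of doc."""
    result = copy.deepcopy(doc)
    for op in patch:
        parent, key = _resolve(result, op["path"])
        kind = op["op"]
        if kind not in ("add", "remove", "replace"):
            raise ValueError(f"unsupported operation: {kind}")
        if isinstance(parent, list):
            # "-" addresses the position after the last list element.
            index = len(parent) if key == "-" else int(key)
            if kind == "add":
                parent.insert(index, op["value"])
            elif kind == "replace":
                parent[index] = op["value"]
            else:
                del parent[index]
        elif kind == "remove":
            del parent[key]
        else:
            if kind == "replace" and key not in parent:
                raise KeyError(key)  # replace requires an existing member
            parent[key] = op["value"]
    return result


if __name__ == "__main__":
    document = {"name": "Ada", "tags": ["a"], "steps": 100}  # sample data
    patch = [
        {"op": "replace", "path": "/steps", "value": 250},
        {"op": "add", "path": "/tags/-", "value": "b"},
        {"op": "remove", "path": "/name"},
    ]
    print(apply_patch(document, patch))
```

Because only the changed members travel over the wire, a client can synchronize a large document with a handful of small operations.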

Niklas Heidoff of IBM compared Serverless and Kubernetes and recommended to always use Istio with Kubernetes deployments. There is not a single approach for Serverless. The focus of this talk was on Apache OpenWhisk.

Kubernetes was originally used at Google internally, therefore it is considered pretty mature already despite being open source for only a short time. Minikube or Docker can be used to run Kubernetes locally. Composer is a programming model for orchestrating OpenWhisk functions.

Niklas went on to show a demo how to use Istio for versioning and a/b testing. This cannot be done easily with Serverless, which is mostly concerned about simplicity, just offering (unversioned) functions.

The workshop on Interledger and Website monetization gave an overview of the Interledger architecture, introducing layers for sending transactions very much like TCP/IP layers are used for sending packets over a network. Unlike Lightning, which is source routed so everyone has to know the routing table, Interledger allows nodes to maintain simple routing tables for locally known resources, and route other requests elsewhere.

Labels: austria, technology, wearedevs, webdevelopment

Tuesday, September 9, 2014

Vienna DevOps & Security and System Architects Group meetup summary - Sept 9, 2014

Best practices for AWS Security

Philipp Krenn (@xeraa) nicely explained the fundamental risks of AWS services: Starting services on AWS is easy. So is stopping.

Recent incidents show that a compromised infrastructure can cause more than short disruptions. Several companies went out of business when not only their online services but also data stores and backups were gone:

- Code Spaces goes dark after AWS cloud security hack

- DrawQuest permanently shuts down after security breach

- Bonsai.io suffers from an AWS security incident

- Lock away the root account. Never use this account for service or action authentication, ever.

- Create an IAM user with a password policy for every service or action to limit damage in case an API key gets compromised.

- Use groups to manage permissions.

- Use two-factor authentication (2FA) using Google Authenticator.

- Never commit your credentials to a source code repository.

- Enable IP restrictions to limit who can manage your services even with an API key.

- Enable CloudTrail to trace which user triggered an event using which API key.
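The IP restriction from the list above could look roughly like the policy generated by this Python sketch. It is not taken from the talk's slides. The policy layout follows the IAM policy grammar with the `aws:SourceIp` condition key, and the address range is a documentation-only placeholder. Review and test any such policy before attaching it, because a blanket deny can also block legitimate calls.

```python
import ipaddress
import json


def ip_restriction_policy(allowed_cidrs):
    """Return an IAM policy document that denies requests from other addresses."""
    # Validate each range early; ip_network raises ValueError on bad input.
    cidrs = [str(ipaddress.ip_network(cidr)) for cidr in allowed_cidrs]
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            # Deny everything unless the request comes from an allowed range.
            "Condition": {"NotIpAddress": {"aws:SourceIp": cidrs}},
        }],
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is reserved for documentation (placeholder office range).
    print(json.dumps(ip_restriction_policy(["203.0.113.0/24"]), indent=2))
```

Attaching the resulting document to a group rather than to individual users fits the advice to manage permissions through groups.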

The (fancy!) slides are available here: https://speakerdeck.com/xeraa/i-am-what-iam-for-devops-vienna

ISO 27001 - Goals of ISO 27001, relation to similar standards, implementation scenarios

Roman Kellner, Chief Happiness Officer :-) at @xtradesoft, gave an overview of the ISO 27001 and related standards:

- ISO 27001:2013 Information Security Management System (ISMS) Requirements

- ISO 27002:2013 Code of Practice

- ISO 31000 Risk Management

The structure of ISO 27001 looks somewhat similar to ISO 9001 Quality Assurance, including the monitoring and continuous improvement loop of Plan-Do-Check-Act (PDCA).

For a successful implementation and certification, the ISO 27001 efforts must be supported and driven by the company leadership.

The third talk about Splunk unfortunately had to be postponed.

Labels: cloud, events, itarchitecture, security, technology

Wednesday, July 2, 2014

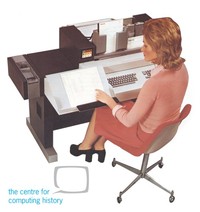

My first summer job and what's the deal with those magnetic ledger cards

The little I remember from those days are fixed working hours from 8–12 and 14–18, with sufficient time for a lunch break at home, handwritten memos, a plethora of documents arriving every few hours that needed to be stamped, sorted, numbered, processed and forwarded to the next department or stored in the archive, and the mix of historic and then-modern business machines.

My responsibilities were mostly sorting and archiving documents, and typing letters on an ancient mechanical Underwood typewriter.

The accounting system was eventually re-implemented on an IBM System/36 minicomputer, and later ported to the IBM AS/400. As a teenager who proudly owned a Commodore 64, these big irons were quite impressive and a motivation to know more about business computing, data modelling and programming languages. (I still have a copy of the COBOL 78 manual, just in case.)

In the thirty years since my first summer job, there have been tremendous changes. No longer do most of us work fixed working hours, rarely do we exchange handwritten memos, and data processing usually happens instantaneously and electronically, not in paper batches.

I am grateful for what I learned during my first summer job and during my professional career since, and looking forward to the next big shifts ahead.

Photo courtesy of The Centre for Computing History - Computer Museum, http://www.computinghistory.org.uk/det/505/philips-p354-visible-records-computer/

Labels: personal, technology

Saturday, May 24, 2014

Happy Birthday, www.ibm.com!

When the World Wide Web was created 25 years ago few people probably realized how much change this would bring, not only to the academic community where this started but to the world at large.

Twenty years ago, IBM published the first homepage on www.ibm.com. The initial site on May 24, 1994 had only a few pages of content and an audio greeting by then-CEO and Chairman Lou Gerstner. (That was the time when most homepages greeted visitors with “Welcome to the Internet”.) Among the things Gerstner said, in retrospect the most important statement was “We are committed to the Internet, and we are excited about providing information to the Internet community”.

Back then I was happily coding System/370 mainframe applications and just had my first encounter with the now defunct Trojan Room Coffee Machine at the University of Cambridge. SNA and Token Ring were our preferred network technologies, and access to the Internet required special permission and signing an NSFnet Acceptable Use Policy document outlining the rules for commercial activities on international networks. Soon much of our business would become e-business.

Only a few years later I was invited to join the www.ibm.com team, a very fine, special team. At a time when business was mainly local, we were already globally integrated, collaborating electronically through an internal IRC network (Alister, remember our daily "gma, hay?" routine?) and eventually the predecessor of IBM Sametime.

Last week the creators of the first homepage and some who worked in Corporate Internet Programs in the early days came together in New York City for an unofficial “motherserver meeting” to celebrate the anniversary. I missed the party, but the pictures brought back memories of the good times (and yes, occasionally bad times) we had running the IBM Website.

Happy Birthday, www.ibm.com!

Labels: ibm, technology

Friday, August 30, 2013

ViennaJS meetup: Veganizer, Enterprise Software Development, Responsiveview, Web components

- Veganizer: Having fun with image manipulation using canvas and vegetables (including a commercial for filepicker.io) https://github.com/franzenzenhofer/veganizer by @enzenhofer

- Enterprise Software Development for JavaScript refugees – Scala.JS (and not EJBJS 2.0, LOL) @rafacm @sebnozzi

- Responsiveview: http://rv.k94n.com/ https://github.com/k9ordon/responsiveview. Other tools at http://responsinator.com/ http://lab.maltewassermann.com/viewport-resizer @thisisgordon

- Web components: Cool talk by @nikgraf about HTML imports and more. http://www.x-tags.org/ can be used to enable Web components in current browsers already

Twitter hashtag: #viennajs

Labels: javascript, technology, webdevelopment

Wednesday, December 12, 2012

IT security beyond computers and smartphones

IT security is not just about computers and smartphones any more. Your smart TV may allow attackers to get access to sensitive information and control the device, as security start-up ReVuln demonstrates for Samsung's Smart TV.

Once simple stand-alone receivers, TV sets, set top boxes and digital recorders are now full-featured computers that connect to home networks for downloading program guides and software updates, sharing pictures and videos and enabling social media integration.

Read more about recently discovered security flaws in home entertainment equipment on The Register.

Labels: security, technology

Wednesday, November 30, 2011

Velocity Europe 2011 conference report

Web companies, big and small, face the same challenges. Our pages must be fast, our infrastructure must scale up (and down) efficiently, and our sites and services must be reliable … without burning out the team.

Velocity Europe conference Website

Three years after its inception in California O’Reilly’s Velocity Web Performance and Operations Conference finally made it to Europe. Some 500 people, web developers, architects, system administrators, hackers, designers, artists, got together at Velocity Europe in Berlin on November 8 and 9 to learn about the latest developments in web performance optimization and managing web infrastructure, exchange ideas and meet vendors in the exhibition hall.

Velocity Europe was well organized and run. There were power strips everywhere and a dedicated wireless network for the participants, although the latter barely handled the load when everyone was hogging bandwidth. Seeing bytes trickling in slowly at a performance conference was not without irony. Some things never change: Getting connected sometimes requires patience and endurance. Back in the days when I was volunteering at the W3C conferences, preparation involved running cables and configuring the “Internet access room”; back then, contention for network resources meant waiting for an available computer.

As expected for a techie conference, about the only people wearing jackets and ties were the AV operators, food was plentiful and good, and the sponsors handed out T-shirts, caps, and other give-aways. Plenary sessions were recorded and streamed live, and #velocityconf on Twitter also has a good collection of facts and memorable quotes for those who couldn’t attend in person.

Steve Souders and John Allspaw led through two busy days packed with plenary sessions, lightning talks and two parallel tracks on Web performance and Web operations. While bits and bytes certainly mattered to the speakers and the audience, the focus was clearly on improving the Web experience for users and the business aspects of fast and well-managed Web sites.

The conference started with a controversial talk about building a career in Web operations by Theo Schlossnagle, and I couldn’t agree more with many of his observations, from suggesting discipline and patience (and recommending martial arts to develop those virtues), learning from mistakes, developing with operations in mind to seeing security not as a feature but a mentality, a state of mind. Along the same lines, Jon Jenkins later talked about the importance of dev ops velocity, why it’s important to iterate fast, deploy fast, and learn from mistakes quickly, mentioning the OODA loop. Some of the Amazon.com deployment stats are just mind-boggling: 11.6 seconds mean time between deployments, and over 1,000 deployments in a single hour to thousands of hosts.

Joshua Bixby addressed the relationship between faster mobile sites and business KPIs. Details of the tests conducted and the short-term and long-term effects on visitor behaviour are also available in his recent blog post about a controlled performance degradation experiment conducted by Strangeloop. Another interesting observation was the strong preference of customers for the full Web sites over mobile versions and native apps: One retailer in the U. S. found that of the online revenue growth for that company was driven by the full site. 35% of the visitors on their mobile site clicked through to the full site immediately, 24% left on page 1, another 40% left after page 1, and only 1% bought something.

Performance also matters at Betfair, one of the world’s largest betting providers. Doing cool stuff is important too, but according to Tim Morrow’s performance pyramid of needs that’s not where you start:

- It works.

- It’s fast.

- It’s useful. (I personally have a slight preference for useful over fast.)

- It’s cool.

Jeffrey Veen of Hotwired, Adaptive Path, TypeKit fame kicked off the second day with an inspiring talk on designing for disaster, working through crises and doing the impossible. I liked the fancy status boards on the walls, and the “CODE YELLOW” mode, the openness and the clear roles when something bad happens. And something bad will happen, as John Allspaw pointed out: “You will reach the point of compensation exhausted, systems, networks, staff, and budgets.” A helpful technique for planning changes is to write down the assumptions, expected outcomes and potential failures individually, and then consolidate results as a group and look for discrepancies. If things still go wrong, Michael Brunton-Spall and Lisa van Gelder suggested staying calm, isolating failing components, and reducing functionality to the core. Having mechanisms in place to easily switch on and off optional features is helpful, down to “page pressing” to produce static copies of the most frequently requested content to handle peak loads.

Several talks covered scripting performance and optimization techniques. JavaScript is already getting really fast, as David Mandelin pointed out, running everything from physics engines to an H.264 decoder at 30 fps, as long as we avoid sparse arrays and the slow eval statements and with blocks. Using proven libraries is generally a good idea and results in less code and good cross-browser compatibility, but Aaron Peters made the point that using jQuery (or your favorite JavaScript library) for everything may not be the best solution, and accessing the DOM directly when it’s simple and straightforward can be a better choice. Besides that, don’t load scripts if the page doesn’t need them – not that anyone would ever do that, right? – and then do waterfall chart analysis, time and again. Mathias Bynens added various techniques for reducing the number of accesses to the DOM, function calls and lookups with ready-to-use code snippets for common tasks.

For better mobile UI performance, Estelle Weyl suggested inlining CSS and JS on the first page, using data: URLs and extracting and saving resources in LocalStorage. Power Saving Mode (PSM) for Wi-fi and Radio Resource Control (RRC) for cellular are intended to increase battery life but have the potential to degrade perceived application performance as subsequent requests will have to wait for the network reconnection. Jon Jenkins explained the split browser architecture of Amazon Silk, which can use proxy servers on Amazon EC2 for compression, caching and predictive loading to overcome some of these performance hogs.
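The data: URL technique mentioned above can be sketched in a few lines of Python, for example as part of a build step. The helper name and the sample stylesheet are made up for illustration. The sketch only shows how a small resource is turned into an inline URL that needs no extra request, and says nothing about when inlining actually pays off.

```python
import base64


def to_data_url(content: bytes, mime_type: str) -> str:
    """Encode a small resource as a base64 data: URL for inlining."""
    encoded = base64.b64encode(content).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


if __name__ == "__main__":
    css = b"body{margin:0}"  # sample stylesheet content
    print(f'<link rel="stylesheet" href="{to_data_url(css, "text/css")}">')
```

Base64 adds roughly a third to the payload size, so this suits small resources on the first page rather than large images.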

IBM’s Patrick Mueller showed WEINRE (WEb INspector REmote) for mobile testing, a component of the PhoneGap project.

Google has been a strong advocate for a faster Web experience and long offered tools for measuring and improving performance. The Apache module mod_pagespeed will do much of the heavy lifting to optimize web performance, from inlining small CSS files to compressing images and moving metadata to headers. Andrew Oates also revealed Google’s latest enhancements to Page Speed Online, and gave away the secret parameter to access the new Critical Path Explorer component. Day 2 ended with an awesome talk by Bradley Heilbrun about what it takes to run the platform that serves “funny cat videos and dogs on skateboards”. Bradley had been the first ops guy at YouTube, which once started with five Apache boxes hosted at Rackspace. They have a few more boxes now.

With lots of useful information, real world experiences and ideas we can apply to our Websites, three books signed by the authors and conference chairs, High Performance Web Sites and Even Faster Web Sites, and Web Operations: Keeping the Data On Time, stickers, caps and cars for the kids, Velocity Europe worked great for me. The next Velocity will be held in Santa Clara, California in June next year, and hopefully there will be another Velocity Europe again.

Photo credit: O’Reilly

Labels: events, javascript, metrics, networking, technology, webdevelopment

Friday, September 30, 2011

Goodbye, Delicious!

When AVOS took over, they promised Delicious would become “even easier and more fun to save, share, and discover”.

I haven’t quite figured out what the new site is about. All I can tell is that I am not interested in the featured stacks about synths and electronic music, 7 top articles on Michael Jackson, or Beyonce and beyond. I WANT MY BOOKMARKS!

One reason for using an online bookmarking service is the ability to share bookmarks between browsers and computers. Sure enough the site no longer works with Internet Explorer 8 at all and suggests that it might work better on Firefox.

Tag lists were temporarily broken. Search suggests fairly useless related tags (anyone in Vienna looking for dentists in London, Syracuse and Colorado?) Even bookmarking, the raison d'être of this site, doesn’t work well any more.

It’s obviously time to look for another bookmarking service while the Delicious export to save a bookmarks file locally still works.

Goodbye, Delicious!

Labels: technology, web2.0

Wednesday, April 27, 2011

Bookmarks on the move

AVOS acquires Delicious social bookmarking service from Yahoo!

After months of rumors about Delicious’ future, Yahoo! announced that the popular social bookmarking service has been acquired by Chad Hurley and Steve Chen, the founders of YouTube. Delicious will become part of their new Internet company, AVOS, and will be enhanced to become “even easier and more fun to save, share, and discover” according to AVOS’ FAQ for Delicious.

Delicious became well-known not only for its service but also for the clever domain hack when it was still called del.icio.us (and yes, remembering where to place the dots was hard!) Once called “one of the grandparents of the Web 2.0 movement” Delicious provides a simple user interface, mass editing capabilities and a complete API and doesn’t look old in its eighth year in service.

Let’s hope that the smart folks at AVOS will keep Delicious running smoothly.

PS. Current bookmarks should carry over once you agree to AVOS’ terms of use and privacy statement, but keeping a copy of your bookmarks might be a good idea. To export/download bookmarks access https://secure.delicious.com/settings/bookmarks/export and save the bookmark file locally, including tags and notes.

Labels: technology, web2.0

Wednesday, February 9, 2011

Google vs. Bing: A technical solution for fair use of clickstream data

When Google engineers noticed that Bing unexpectedly returned the same result as Google for a misspelling of tarsorrhapy, they concluded that somehow Bing considered Google’s search results for its own ranking. Danny Sullivan ran the story about Bing cheating and copying Google search results last week. (Also read his second article on this subject, Bing: Why Google’s Wrong In Its Accusations.)

Google decided to create a trap for Bing by returning results for about 100 bogus terms, as Amit Singhal, a Google Fellow who oversees the search engine’s ranking algorithm, explains:

To be clear, the synthetic query had no relationship with the inserted result we chose: the query didn’t appear on the webpage, and there were no links to the webpage with that query phrase. In other words, there was absolutely no reason for any search engine to return that webpage for that synthetic query. You can think of the synthetic queries with inserted results as the search engine equivalent of marked bills in a bank.

Running Internet Explorer 8 with the Bing toolbar installed, and the “Suggested Sites” feature of IE8 enabled, Google engineers searched Google for these terms and clicked on the inserted results, and confirmed that a few of these results, including “delhipublicschool40 chdjob”, “hiybbprqag”, “indoswiftjobinproduction”, “jiudgefallon”, “juegosdeben1ogrande”, “mbzrxpgjys” and “ygyuuttuu hjhhiihhhu”, started appearing in Bing a few weeks later:

The experiment showed that Bing uses clickstream data to determine relevant content, a fact that Microsoft’s Harry Shum, Vice President Bing, confirmed:

We use over 1,000 different signals and features in our ranking algorithm. A small piece of that is clickstream data we get from some of our customers, who opt-in to sharing anonymous data as they navigate the web in order to help us improve the experience for all users.

These clickstream data include Google search results, more specifically the click-throughs from Google search result pages. Bing considers these for its own results and consequently may show pages which otherwise wouldn’t show in the results at all since they don’t contain the search term, or rank results differently. Relying on a single signal made Bing susceptible to spamming, and algorithms would need to be improved to weed suspicious results out, Shum acknowledged.

As an aside, Google had also experienced in the past how relying too heavily on a few signals allowed individuals to influence the ranking of particular pages for search terms such as “miserable failure”; despite improvements to the ranking algorithm we continue to see successful Google bombs. (John Dozier's book about Google bombing nicely explains how to protect yourself from online defamation.)

The experiment failed to validate if other sources are considered in the clickstream data. Outraged about the findings, Google accused Bing of stealing its data and claimed that “Bing results increasingly look like an incomplete, stale version of Google results—a cheap imitation”.

Whither clickstream data?

Privacy concerns aside (customers installing IE8 and the Bing toolbar, or most other toolbars for that matter, may not fully understand and often not care how their behavior is tracked and shared with vendors), using clickstream data to determine relevant content for search results makes sense. Search engines have long considered click-throughs on their results pages in ranking algorithms, and specialized search engines or site search functions will often expose content that a general purpose search engine crawler hasn’t found yet.

Google also collects loads of clickstream data from the Google toolbar and the popular Google Analytics service, but claims that Google does not consider Google Analytics for page ranking.

Using clickstream data from browsers and toolbars to discover additional pages and seeding the crawler with those pages is different from using the referring information to determine relevant results for search terms. Microsoft Research recently published a paper Learning Phrase-Based Spelling Error Models from Clickthrough Data about how to improve the spelling corrections by using click data from “other search engines”. While there is no evidence that the described techniques have been implemented in Bing, “targeting Google deliberately” as Matt Cutts puts it would undoubtedly go beyond fair use of clickstream data.

Google considers the use of clickstream data that contains Google Search URLs plagiarism and doesn't want another search engine to use this data. With Google dominating the search market and handling the vast majority of searches, Bing's inclusion of results from a competitor remains questionable even without targeting, and dropping that signal from the algorithm would be a wise choice.

Should all clickstream data be dropped from the ranking algorithms, or just certain sources? Will the courts decide what constitutes fair use of clickstream data and who “owns” these data, or can we come up with a technical solution?

Robots Exclusion Protocol to the rescue

The Robots Exclusion Protocol provides an effective and scalable mechanism for selecting appropriate sources for resource discovery and ranking. Clickstream data sources and crawler results have a lot in common. Both provide information about pages for inclusion in the search index, and relevance information in the form of inbound links or referring pages, respectively.

| Dimension | Crawler | Clickstream |
|---|---|---|
| Source | Web page | Referring page |
| Target | Link | Followed link |
| Weight | Link count and equity | Click volume |

Following the Robots Exclusion Protocol, search engines only index Web pages which are not blocked in robots.txt, and not marked non-indexable with a robots meta tag. Applying the protocol to clickstream data, search engines should only consider indexable pages in the ranking algorithms, and limit the use of clickstream data to resource discovery when the referring page cannot be indexed.

Search engines will still be able to use clickstream data from sites which allow access to local search results, for example the site search on amazon.com, whereas Google search results are marked as non-indexable in http://www.google.com/robots.txt and therefore excluded.
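The rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any search engine actually works: the function name `clickstream_use`, the example hostnames and the robots.txt contents are all made up, and the sketch checks robots.txt only, ignoring the robots meta tag.

```python
from urllib.robotparser import RobotFileParser


def clickstream_use(referrer_url, robots_txt, user_agent="*"):
    """Decide how a click coming from referrer_url may be used.

    "ranking"   - the referring page may be crawled, so the click
                  may count as a relevance signal
    "discovery" - the referring page is blocked, so the click may
                  only be used to discover the target URL
    """
    parser = RobotFileParser()
    # Parse the robots.txt text directly; nothing is fetched here.
    parser.parse(robots_txt.splitlines())
    if parser.can_fetch(user_agent, referrer_url):
        return "ranking"
    return "discovery"


# Hypothetical robots.txt contents, for illustration only.
BLOCKS_SEARCH = "User-agent: *\nDisallow: /search\n"
ALLOWS_ALL = "User-agent: *\nDisallow:\n"

# A general search engine that blocks its result pages
print(clickstream_use("http://search.example.com/search?q=widgets", BLOCKS_SEARCH))  # discovery

# A site search whose result pages are open to crawlers
print(clickstream_use("http://shop.example.com/s?k=widgets", ALLOWS_ALL))  # ranking
```

In this sketch the decision depends only on the referring page, which matches the proposal: the target page can still be found and crawled, but a blocked referrer contributes nothing to ranking.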

Clear disclosure of how clickstream data are used and a choice to opt in or opt out put Web users in control of their clickstream data. Applying the Robots Exclusion Protocol to clickstream data will further allow Web site owners to control third party use of their URL information.

Labels: bing, google, microsoft, seo, technology, webdevelopment

Monday, January 24, 2011

IBM turns 100

The IBM Centennial Film: 100×100 shows IBM's history of innovation, featuring one hundred people who present the IBM achievement recorded in the year they were born, and bridges into the future with new challenges to build a smarter planet.

Another 30-minute video tells the story behind IBM inventions and innovations.

For more than twenty years I have not just worked for IBM but been a part of IBM. It has been a pleasure, and I certainly look forward to many more to come!

I am an IBMer.

Labels: business, ibm, innovation, technology

Sunday, November 7, 2010

How to fix the “Your computer is not connected to the network” error with Yahoo! Messenger

If you are like me and upgrade software only when there are critical security fixes or you badly need a new feature, you may have tried sticking to an older version of Yahoo! Messenger. I made the mistake of upgrading, and was almost cut off from voice service for a few days. Fortunately, Yahoo! has a fix for the problem, which only seems to affect some users.

The new video calling capability in Yahoo! Messenger 10 didn't really draw my attention. Nevertheless I eventually gave in and allowed the automatic upgrade, if only to get rid of the nagging upgrade notice. At first everything seemed fine: The settings were copied over, and the user interface looked reasonably familiar. However, soon after, voice calls started failing with an obscure error message “Your computer is not connected to the network”. Restarting Yahoo! Messenger sometimes helped, but clearly this wasn't working as reliably as the previous version. “Works sometimes” wasn't good enough for me.

Yahoo! support was exceptionally helpful: within minutes the helpdesk agent had identified that I was running Yahoo! Messenger version 10.0.0.1270-us, which was the latest and greatest at the time but had a few issues with voice telephony. He recommended uninstalling the current messenger, manually installing a slightly back-level version of Yahoo! Messenger 10, and disallowing further automatic upgrades. The installation worked smoothly, and voice support in Yahoo! Messenger has been working flawlessly ever since.

Thank you, Yahoo! support.

Labels: networking, technology, windows

Monday, August 9, 2010

20 years Internet in Austria

On August 10, 1990, Austria became connected to the Internet with a 64 Kbit/s leased line between Vienna University and CERN in Geneva. Having Internet connectivity at one university didn’t mean everything moved to the Internet immediately.

The first online service I used was CompuServe in 1985 while visiting friends in the UK. Watching the characters and occasional block graphics slowly trickle in over an acoustic coupler at 300 baud was exciting (and expensive for my host). Back home, the post and telecom’s BTX service and Mupid decoders promised a colorful world of online content at “high speed”, relatively speaking, but most of the online conversations still happened on FidoNet, which was the easiest way to get connected. “His Master’s Voice” and “Cuckoo’s Nest” were my favorite nodes.

At university our VAX terminals in the labs continued to run on DECnet, as did the university administration system. We learned the ISO/OSI reference model and RPC over Ethernet, but heard no word of TCP/IP. At work my 3279 terminal eventually gave way to an IBM PS/2 with a 3270 network card, and some foresighted folks in our network group Advantis and in IBM Research started putting gateways in place to link the mostly SNA connected mainframes with the Internet. The BITFTP service Melinda Varian provided at Princeton University opened another window to the Internet world (belatedly, Melinda, thank you!).

Meanwhile Tim Berners-Lee and Robert Cailliau made the first proposal for a system they modestly called the World Wide Web in 1989, and further refined it in 1990.

I don’t recall when I got my first Internet e-mail address and access to the Internet gateways, after signing agreements that I wouldn’t distribute commercial information over NSFNet and would only use the Internet responsibly. It was only in 1994 that I took notice of the first Website, the now defunct Trojan Room Coffee Machine at the University of Cambridge, and another year before I had my first homepage and my own domain. As many Websites in those days would read, “Welcome to the Internet”.

Happy 20th anniversary to the Internet in Austria!

Related links:

- 20 Jahre Internet in at – Die Revolution fraß ihre Kinder (derstandard.at)

- 20 Jahre Internet in Österreich (Futurezone interview with Austrian Internet pioneer Peter Rastl)

- 20 Years of Internet in Austria/20 Years of ACOnet Infrastructure

Labels: austria, technology

Thursday, June 24, 2010

Human rights 2.0

Krone focused on freedom of the media, freedom of speech and data privacy in the European Union, pointing out that the Internet itself is not a mass medium but merely a communication channel that carries, amongst other things, media products. Individuals often gather information about others purely to satisfy their curiosity, and conversely share their personal information seeking recognition. Companies mainly satisfy their business needs and sometimes manage to create “sect-like islands on the net like Apple does”, but generally lack the sensitivity and awareness for data privacy needs. States need to balance the need for security and state intervention with the freedom of the people and basic rights.

In the following discussion, Krone suggested the Internet would eventually become fragmented along cultural or ideological borders, and Europe would have to build a European firewall similar to the Great Firewall in China (which uses technology from European IT and telecom suppliers). The audience strongly objected to the notion of a digital Schengen border, which goes against the liberal tradition in many European countries and doesn’t recognize the range of beliefs and the diversity within Europe.

Benedek talked about Internet governance and the role of the Internet Governance Forum (IGF), a “forum for multi-stakeholder policy dialogue”. Concepts for dealing with illegal activities and what is considered acceptable and appropriate encroachment upon basic rights such as those guaranteed by the European Convention on Human Rights (ECHR) vary between countries. Even more, what is illegal in one country may be perfectly legal and even socially accepted behavior elsewhere.

Touching on net neutrality and the digital divide, he mentioned that there is a push to make Internet access a human right and some countries have indeed added rights to participate in the information society to their constitutions. At the same time the copyright industry focuses on the three strikes model in the Anti-Counterfeiting Trade Agreement (ACTA) as punishment for intellectual property violations.

ACTA is not the only threat to access for all though: Much content today is only available to people who understand English, and not all content is suitable for children or accessible to elderly people. How we can make the net accessible to people of all ages and qualifications, and in their native languages, remains a challenge.

Basic human rights, including the rights to education, freedom of speech and freedom of the press, increasingly have a material dependency on the right to Internet access. As an audience member pointed out, “offline” studying at university is virtually impossible; long gone are the days of paper handouts and blackboard announcements.

Both speakers agreed that the right to privacy requires “educated decisions” by the people, and consequently educating people. The lectures and the following lively discussion last night served that purpose well.

Related links:

- Announcement “Menschenrechte 2.0 – Menschenrechte in unserer Informationsgesellschaft”

- Fonds zur Förderung der wissenschaftlichen Forschung (FWF)

- PR&D Kommunikationsdienstleistungen GmbH

Labels: education, events, privacy, society, technology